Temporal tables are one of the best features available in SQL Server 2016. A temporal table lets you see the data in a table as it was at any point in time. With a normal table, an UPDATE or DELETE statement overwrites or removes the old data, so any later query returns only the latest data. With a temporal table you can view the latest data as well as the old data. But how? Let me first explain how it works.

- How do temporal tables work?

- What happens when you insert record(s) into a temporal table?

When you insert data into a temporal table, the data stays in the temporal table just as it would in a normal table, and the history table is NOT affected, as shown below.

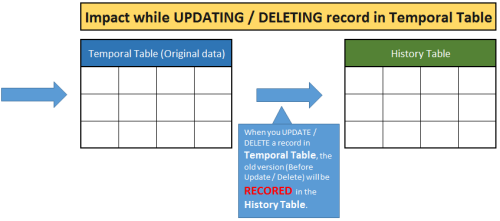

- What happens when you update/delete record(s) in a temporal table?

When you update or delete data in a temporal table, the existing version of each affected row is FIRST copied into the history table, and only then is the row changed in (or removed from) the temporal table.

- What happens when you query record(s) in a temporal table?

When you query a temporal table, SQL Server decides whether to return rows from the temporal table, from the history table, or from both, based on the FOR SYSTEM_TIME clause in your query. Without that clause you get the current rows only. You do NOT have to write any joins or subqueries between the temporal table and the history table.

- Create Temporal Table

When you create a temporal table, SQL Server automatically creates a history table for it (if you already have an existing history table, you can link it to the temporal table instead). The script below is a usual table creation script with two additional columns. These additional columns (fields) define the system-time period of the temporal table, and because they are declared HIDDEN, they will not appear in the result when you query the table with SELECT *. I also turned on system versioning and declared the history table name “dbo.tbl_Product_History”, so the history table is created with that name. If you do not declare a history table name, SQL Server creates the history table with a generated name of the form MSSQL_TemporalHistoryFor_<object_id>.

```sql
CREATE DATABASE SampleDB
GO
USE SampleDB
GO
-- To re-run this script, switch system versioning off before dropping the tables:
--ALTER TABLE tbl_Product SET (SYSTEM_VERSIONING = OFF)
--DROP TABLE tbl_Product
--DROP TABLE tbl_Product_History
--GO
CREATE TABLE tbl_Product
(
    Product_ID   int NOT NULL PRIMARY KEY CLUSTERED,
    Product_Name varchar(50) NOT NULL,
    Rate         numeric(18,2),
    /* Temporal Specific Fields - Period Definition */
    [Valid From] datetime2 GENERATED ALWAYS AS ROW START HIDDEN NOT NULL,
    [Valid Till] datetime2 GENERATED ALWAYS AS ROW END HIDDEN NOT NULL,
    PERIOD FOR SYSTEM_TIME ([Valid From], [Valid Till])
)
WITH
/* Temporal Specific - System-Versioning Configuration */
(SYSTEM_VERSIONING = ON
    (HISTORY_TABLE = dbo.tbl_Product_History,
     DATA_CONSISTENCY_CHECK = ON)
);
GO
```
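To confirm that the table really is system-versioned and to see which history table it is linked to, you can look at the catalog view `sys.tables`. This is only a quick sketch; it assumes the table names used in this post and that both tables live in SampleDB.

```sql
USE SampleDB
GO
-- temporal_type_desc shows SYSTEM_VERSIONED_TEMPORAL_TABLE for the temporal table
-- and HISTORY_TABLE for its history table; history_table_id links the two.
SELECT t.name AS table_name,
       t.temporal_type_desc,
       h.name AS history_table_name
FROM sys.tables AS t
LEFT JOIN sys.tables AS h
       ON h.object_id = t.history_table_id
WHERE t.name IN ('tbl_Product', 'tbl_Product_History')
GO
```

For the history table itself, `history_table_name` comes back as NULL because it has no history table of its own.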

- Data Manipulation Language (DML) statements on a temporal table

Let's execute some Data Manipulation Language (DML) statements on the temporal table and view the impact on the temporal table as well as the history table.

- INSERT STATEMENT

As I explained earlier, when you execute an INSERT statement on a temporal table, there is NO impact on the history table. Let's execute an INSERT statement and observe the results.

```sql
USE SampleDB
GO
INSERT INTO dbo.tbl_Product VALUES
 (1, 'Product A', 300)
,(2, 'Product B', 400)
GO
SELECT * FROM tbl_Product
GO
SELECT * FROM dbo.tbl_Product_History
GO
```
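Because the period columns are HIDDEN, `SELECT *` does not show them. If you want to see when each row version became valid, you can name the columns explicitly. A minimal sketch, assuming the table created above:

```sql
USE SampleDB
GO
-- HIDDEN period columns are left out of SELECT * but can be listed by name.
SELECT Product_ID, Product_Name, Rate, [Valid From], [Valid Till]
FROM dbo.tbl_Product
GO
```

For current rows, [Valid Till] holds the maximum datetime2 value (9999-12-31 23:59:59.9999999), and both period columns are recorded in UTC, not in local time.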

- UPDATE STATEMENT

As I explained earlier, when you execute an UPDATE statement on a temporal table, the OLD version of each updated row is moved to the history table and the temporal table holds the latest data ONLY. Let's execute an UPDATE statement and observe the results.

```sql
USE SampleDB
GO
UPDATE tbl_Product SET Rate = Rate / 2
WHERE Product_ID IN (1, 2)
GO
SELECT * FROM tbl_Product
GO
SELECT * FROM dbo.tbl_Product_History
GO
```

- DELETE STATEMENT

As I explained earlier, when you execute a DELETE statement on a temporal table, the last version of each deleted row is moved to the history table and the row is removed from the temporal table, which keeps the remaining current rows ONLY. Let's execute a DELETE statement and observe the results.

```sql
USE SampleDB
GO
DELETE FROM tbl_Product WHERE Product_ID = 2
GO
SELECT * FROM tbl_Product
GO
SELECT * FROM dbo.tbl_Product_History
GO
```
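To follow what happened to the deleted product, you can list its versions from the history table together with their validity periods. This is just a sketch and assumes you ran the INSERT, UPDATE and DELETE statements above in that order.

```sql
USE SampleDB
GO
-- Every past version of Product 2, oldest first.
-- The latest [Valid Till] value is the (UTC) time at which the row was deleted.
SELECT Product_ID, Product_Name, Rate, [Valid From], [Valid Till]
FROM dbo.tbl_Product_History
WHERE Product_ID = 2
ORDER BY [Valid From]
GO
```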

- SELECT STATEMENT

The SELECT statement is the interesting part of a temporal table: you tell it which point in time you want, and it links internally to the history table to fulfil your request. Given below are some queries that we will run against the temporal table, and you will see that the temporal table (NOT the history table) returns the current state as well as earlier states of the table. Isn’t it amazing?

Let's browse the TEMPORAL TABLE first. It shows the latest state of the table, just like a NORMAL TABLE.

```sql
USE SampleDB
GO
--Current State of the table
SELECT * FROM tbl_Product
GO
```

Let's browse the TEMPORAL TABLE FOR SYSTEM_TIME AS OF ‘2015-06-27 21:33:50.9002439’. This timestamp comes from my own run, so you will need to substitute a time from yours (system times are recorded in UTC). The query gives you the state of the table at that moment, and you will be surprised to see that the temporal table returns exactly what was there BEFORE the UPDATE statement. Wow!

```sql
USE SampleDB
GO
SELECT * FROM tbl_Product
FOR SYSTEM_TIME AS OF '2015-06-27 21:33:50.9002439'
GO
```
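The timestamp above is specific to my run. If you do not want to copy a value by hand, one option is to read a suitable time from the history table into a variable, since FOR SYSTEM_TIME AS OF also accepts a variable. The sketch below assumes the UPDATE has already been executed (so the history table is not empty); the variable name @AsOf is my own choice.

```sql
USE SampleDB
GO
-- The earliest [Valid From] in the history table is the time the original rows
-- were inserted, so the table AS OF that time shows the state before the UPDATE.
DECLARE @AsOf datetime2 =
    (SELECT MIN([Valid From]) FROM dbo.tbl_Product_History);

SELECT * FROM dbo.tbl_Product
FOR SYSTEM_TIME AS OF @AsOf;
GO
```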

Let's browse the TEMPORAL TABLE FOR SYSTEM_TIME AS OF ‘2015-06-27 21:43:31.2982847’. You will AGAIN be surprised to see that the temporal table returns exactly what was there BEFORE the DELETE statement. Wow!

```sql
USE SampleDB
GO
SELECT * FROM tbl_Product
FOR SYSTEM_TIME AS OF '2015-06-27 21:43:31.2982847'
GO
```
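AS OF is not the only option. FOR SYSTEM_TIME also supports ALL and BETWEEN ... AND ..., which return several versions of a row at once. Here is a rough sketch against the same table; the time window in the second query is only a placeholder chosen around the timestamps from my run, so adjust it to your own data.

```sql
USE SampleDB
GO
-- Every version of Product 1, current and historical, oldest first.
SELECT Product_ID, Product_Name, Rate, [Valid From], [Valid Till]
FROM dbo.tbl_Product
FOR SYSTEM_TIME ALL
WHERE Product_ID = 1
ORDER BY [Valid From];

-- Row versions that were active at some point within a time window (UTC).
SELECT Product_ID, Product_Name, Rate, [Valid From], [Valid Till]
FROM dbo.tbl_Product
FOR SYSTEM_TIME BETWEEN '2015-06-27 21:00:00' AND '2015-06-27 22:00:00'
ORDER BY Product_ID, [Valid From];
GO
```

In both queries the period columns are listed explicitly, because they are HIDDEN and would not show up with SELECT *.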

Conclusion :

I took a quick look at temporal tables and found them very interesting and exciting. I am sure they will change the way databases are designed, especially data warehouses, because we no longer need to create separate audit tables and record each state manually with stored procedures or triggers. The TEMPORAL TABLE does it for us automatically behind the scenes.

Let me know about your experience with temporal tables.

Reference : Channel9